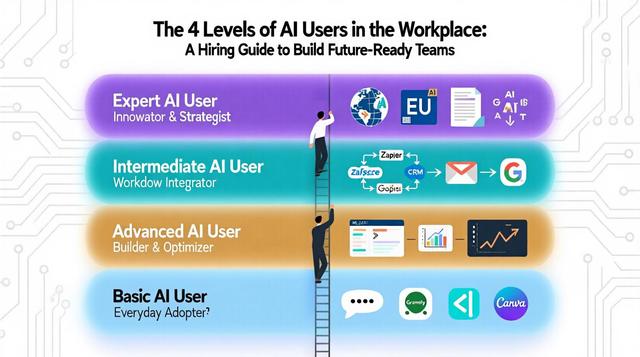

Future-Ready Teams: Hiring the 4 Levels of AI Users at Work

Published on Sep 11, 2025

by Jesus Montano

Why AI Literacy Is the New Core Skill

Artificial intelligence is no longer optional—it’s a fundamental workplace skill. From marketing automation to AI-driven HR screening, nearly every role touches AI tools.

But here’s the problem: not all AI users have the same proficiency. Some treat AI like a quick productivity hack, while others integrate it into workflows or even build new solutions.

For hiring managers and business leaders, understanding these AI literacy levels is critical. Misjudging a candidate’s skills can result in:

Wasted training costs

Missed automation opportunities

Compliance and ethical risks

This guide breaks down the four levels of AI proficiency in the workplace, with examples and interview strategies to help you hire smarter.

The 4 Levels of AI Users in the Workplace (With Examples)

1. The Basic AI User (Everyday Adopter)

“I use AI tools but don’t know how they work.”

Who they are: Administrative staff, sales reps, customer service agents.

What they do:

Use ChatGPT to draft emails or summarize notes.

Run spell-check or AI grammar tools like Grammarly.

Rely on Canva’s AI features for quick design edits.

Ask Siri or Alexa for scheduling reminders.

Key traits:

Can use pre-built tools but can’t troubleshoot issues.

Little awareness of AI’s limitations.

Example: A support agent writes 50% of emails with AI but struggles when prompts produce confusing answers.

Why they matter: They boost productivity but require oversight to avoid mistakes.

2. The Intermediate AI User (Workflow Integrator)

“I connect AI tools to solve business problems.”

Who they are: Marketing managers, operations leads, HR coordinators, junior developers.

What they do:

Build no-code automations with Zapier or Make.com.

Fine-tune pre-trained AI models for business-specific use.

Engineer prompts for tailored, context-aware outputs.

Key traits:

Understands APIs and workflows.

Troubleshoots AI outputs logically.

Example: A marketing lead sets up an automated system to analyze campaign data and generate weekly reports, saving more than 10 hours per month.

Why they matter: They transform AI into a scalable asset instead of a one-off tool.

3. The Advanced AI User (Builder & Optimizer)

“I train, optimize, and deploy AI models.”

Who they are: Data scientists, ML engineers, AI product leads.

What they do:

Fine-tune large language models for domain tasks.

Track experiments and model versions with MLOps platforms like MLflow, and monitor deployed models for drift.

Evaluate AI performance against KPIs.

Key traits:

Can explain precision/recall trade-offs.

Skilled in data quality and architecture.

Example: An engineer builds a fraud-detection model that reduces false positives by 35%.

Why they matter: They prevent costly AI failures and align AI systems with business goals.
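To make the precision/recall trade-off concrete, here is a minimal Python sketch in the spirit of the fraud-detection example. The scores, labels, and thresholds are invented for illustration, and `precision_recall` is a hypothetical helper, not part of any library. It shows how raising the decision threshold cuts false positives while letting more real fraud slip through.

```python
from typing import List, Tuple


def precision_recall(
    scores: List[float], labels: List[int], threshold: float
) -> Tuple[float, float]:
    """Return (precision, recall) when scores >= threshold are flagged as fraud."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s >= threshold and y == 1)  # fraud caught
    fp = sum(1 for s, y in pairs if s >= threshold and y == 0)  # false alarms
    fn = sum(1 for s, y in pairs if s < threshold and y == 1)   # fraud missed
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical model scores and true labels (1 = fraud), made up for this sketch.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

for threshold in (0.5, 0.75):
    p, r = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold:.2f} precision={p:.2f} recall={r:.2f}")
```

With this toy data, moving the threshold from 0.5 to 0.75 halves the false positives (two down to one) but drops recall from 0.75 to 0.50. A strong advanced candidate should be able to explain which side of that trade the business can afford.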

4. The Expert AI User (Innovator & Strategist)

“I push the boundaries of what AI can do.”

Who they are: Research scientists, AI ethics officers, CTOs.

What they do:

Design new model architectures.

Lead AI governance and ethical reviews.

Publish research or contribute to open-source AI.

Key traits:

Balances innovation with risk.

Understands regulations and frameworks (EU AI Act, NIST AI Risk Management Framework).

Example: A research director develops an AI system that predicts supply chain risks using satellite and social media data.

Why they matter: They future-proof organizations and mitigate ethical risks.

How to Assess AI Skills in Job Candidates

For Basic Users

Ask: “How would you verify an AI-generated report?”

Test: Have the candidate summarize a document with ChatGPT, then point out what they would double-check.

Watch for: Critical thinking, not blind trust.
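One simple form of verification a candidate might describe is checking that every figure in an AI-generated summary actually appears in the source. The Python sketch below illustrates that idea only. The function name `unsupported_numbers` and the sample texts are hypothetical, and a real check would also cover names, dates, and claims that contain no numbers.

```python
import re
from typing import List


def unsupported_numbers(source: str, summary: str) -> List[str]:
    """Return numbers that appear in the summary but nowhere in the source."""
    pattern = r"\d+(?:\.\d+)?%?"  # integers, decimals, optional percent sign
    source_numbers = set(re.findall(pattern, source))
    return [n for n in re.findall(pattern, summary) if n not in source_numbers]


# Invented example texts: the summary misstates the growth figure.
source = "Q2 revenue rose 12% to 4.5 million dollars across 3 regions."
summary = "Revenue rose 15% to 4.5 million dollars across 3 regions."

print(unsupported_numbers(source, summary))  # ['15%']
```

A candidate does not need to write code like this. What matters is that they describe some systematic comparison against the source rather than trusting the output.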

For Intermediate Users

Ask: “How would you automate handling 500+ customer inquiries daily?”

Test: Design an AI-powered recruitment workflow.

Watch for: Error-handling and validation steps.
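As a rough picture of what "error-handling and validation steps" can look like in an inquiry workflow, here is a small Python sketch. Everything in it is an assumption made for illustration: the category names, the `Routing` record, and the `fake_classifier` that stands in for a real AI call. The point is that any empty input, failed call, or unexpected label goes to a human instead of flowing downstream.

```python
from dataclasses import dataclass
from typing import Callable

ALLOWED_CATEGORIES = {"billing", "shipping", "technical"}  # hypothetical categories


@dataclass
class Routing:
    category: str
    needs_human: bool
    reason: str


def route_inquiry(text: str, classify: Callable[[str], str]) -> Routing:
    """Classify an inquiry, falling back to a human when the AI step fails validation."""
    if not text.strip():
        return Routing("unknown", True, "empty inquiry")
    try:
        label = classify(text).strip().lower()
    except Exception as exc:  # the AI call may time out or raise
        return Routing("unknown", True, f"classifier error: {exc}")
    if label not in ALLOWED_CATEGORIES:
        return Routing("unknown", True, f"unexpected label: {label!r}")
    return Routing(label, False, "ok")


def fake_classifier(text: str) -> str:
    """Stand-in for a real model call; returns a sloppy label on purpose."""
    return "Billing" if "invoice" in text.lower() else "maybe refunds?"


print(route_inquiry("Where is my invoice?", fake_classifier))
print(route_inquiry("My parcel is late", fake_classifier))
```

The first inquiry is routed automatically to billing, while the second is flagged for a human because the label is not in the allowed set. Candidates who sketch this kind of fallback path, in code or in a no-code tool, are showing the trait this question is meant to surface.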

For Advanced Users

Ask: “How would you diagnose poor recommendation system performance?”

Test: Debug a flawed sentiment analysis model.

Watch for: Business alignment, not just technical brilliance.

For Experts

Ask: “What AI capability should we invest in next?”

Test: Ethical case study (e.g., bias in AI hiring).

Watch for: Strategic and ethical clarity.

3 Key Hiring Takeaways

Match AI level to the role: Don’t over-hire for advanced skills in admin jobs.

Spot the “AI illusion”: Ask for proof of real projects.

Test ethics as well as tech: Poor AI judgment can be costlier than poor code.

Why This 4-Level Framework Works

Avoids the “AI or nothing” trap: Not every role needs a PhD in AI.

Supports democratization: Basic AI literacy is now expected in every role, and structured assessments keep hiring mistakes in check.

Stat: 72% of hiring managers say AI skills are critical outside tech teams (Gartner, 2024), yet only 28% test for them.

Your Next Steps

Audit your team’s AI usage against the 4 levels.

Add AI proficiency tiers to job descriptions.

Train interviewers to test AI problem-solving, not just knowledge.

Pro tip: Start with prompt engineering and AI output validation, the two most impactful skills for non-tech roles.

Final Thoughts

The goal isn’t to turn every employee into a data scientist. It’s to ensure every role harnesses AI responsibly and effectively.